Every time you ask an AI to write an email, generate an image, or translate a sentence, massive data centers packed with powerful chips hum to life. This digital gold rush, powering a market set to smash through $1 trillion by 2030, has made companies like NVIDIA household names, with their GPUs controlling over 80% of the AI chip space. But this incredible progress comes at a steep price, one that is measured in terawatt-hours.

AI's insatiable appetite for energy is creating a sustainability crisis. The very computer architecture that launched this new era is now its greatest liability. To keep the revolution going, the industry is turning to an unlikely hero: a forgotten technology that’s making a radical comeback.

The problem is a 75-year-old design flaw called the von Neumann bottleneck. In nearly every digital chip today, the processor (the workshop) is physically separate from the memory (the library). To get any work done, data has to be constantly shuttled back and forth along a tiny, congested road.

For AI models with billions of parameters, this is a traffic jam of epic proportions. The processor spends most of its time idle, just waiting for data to arrive. Worse, the energy spent moving all that data is orders of magnitude higher than the energy used for the actual computation.
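To make that imbalance concrete, here is a rough back-of-the-envelope sketch in Python. The per-operation energies are assumptions: ballpark figures often quoted for an older 45 nm chip process, not measurements of any particular AI accelerator. The function names are made up for this illustration.

```python
# Illustrative per-operation energies in picojoules (pJ). These are ASSUMED,
# order-of-magnitude figures often quoted for an older 45 nm process;
# real values vary widely with the chip and memory technology.
ENERGY_PJ = {
    "fp32_multiply": 3.7,      # one 32-bit floating-point multiply
    "dram_read_32bit": 640.0,  # fetching one 32-bit word from off-chip DRAM
}


def movement_to_compute_ratio(energy_pj=ENERGY_PJ):
    """How many times more energy a DRAM fetch costs than a multiply."""
    return energy_pj["dram_read_32bit"] / energy_pj["fp32_multiply"]


def layer_energy_microjoules(num_weights, energy_pj=ENERGY_PJ):
    """Energy to fetch every weight once from DRAM and multiply it once."""
    fetch_pj = num_weights * energy_pj["dram_read_32bit"]
    compute_pj = num_weights * energy_pj["fp32_multiply"]
    return fetch_pj / 1e6, compute_pj / 1e6  # 1 microjoule = 1e6 pJ


ratio = movement_to_compute_ratio()
fetch_uj, compute_uj = layer_energy_microjoules(num_weights=1_000_000)
print(f"A DRAM fetch costs about {ratio:.0f}x the energy of a multiply")
print(f"1M weights: {fetch_uj:.0f} uJ moving data vs {compute_uj:.1f} uJ computing")
```

Under these assumed figures, moving a number costs roughly two orders of magnitude more energy than multiplying it, and that gap is multiplied across every weight the model touches.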

The real-world numbers are mind-boggling:

This isn't just bad for the planet; it's a roadblock to innovation. The astronomical energy costs keep powerful AI locked in the cloud, making it nearly impossible to run sophisticated intelligence on small, battery-powered devices like your phone or watch.

To break free from this bottleneck, engineers are reviving a brilliant, old-school idea: analog computing. Instead of processing rigid 1s and 0s, analog chips work with a continuous range of physical values, like the subtle shifts in a voltage.

This has enabled a game-changing architecture called in-memory computing (IMC), which removes the traffic jam instead of managing it. With IMC, the workshop is built inside the library. AI model weights are stored as physical properties, like the resistance of a memory cell, and the math happens right there, in place, governed by the laws of physics. The endless, energy-wasting data shuttle is eliminated.

The result is a potential for orders-of-magnitude improvements in power efficiency. It’s a paradigm shift that allows AI tasks to be completed with accuracy comparable to digital chips, but at a fraction of the energy cost. This is where pioneers like Vellex Computing are stepping in to rewrite the future of AI hardware. Instead of trying to make the digital traffic jam slightly more efficient, Vellex is eliminating the road entirely.

At the heart of Vellex's platform is its analog compute engine, a specialized architecture that performs the core mathematical operations of AI—billions of multiply-accumulate operations—directly inside the memory array. Here’s how it works:

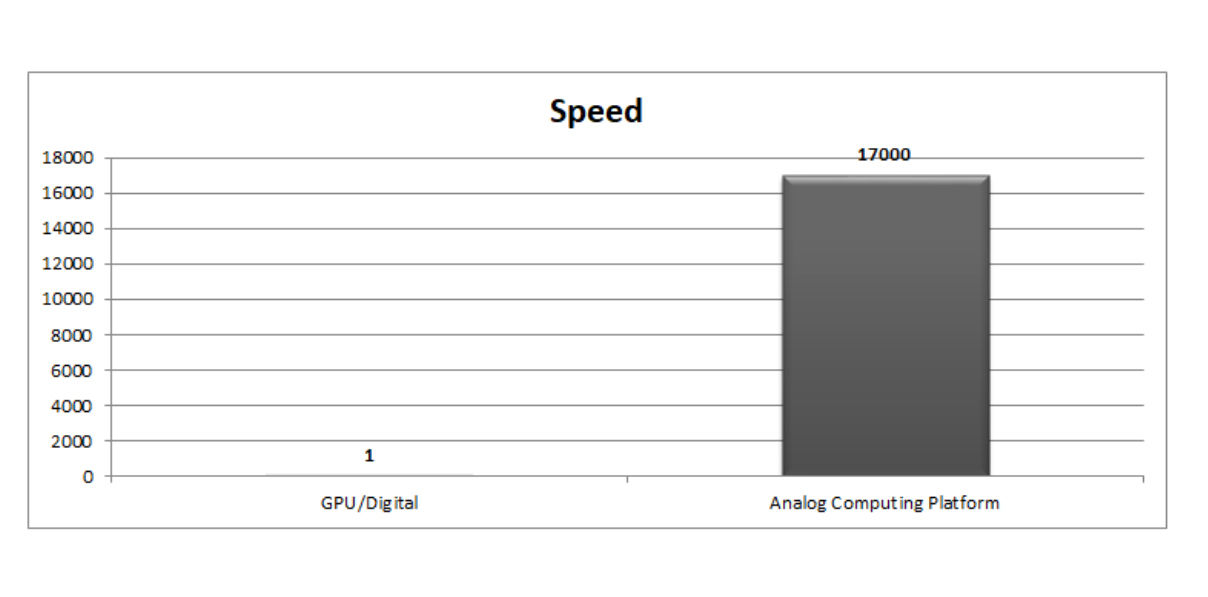

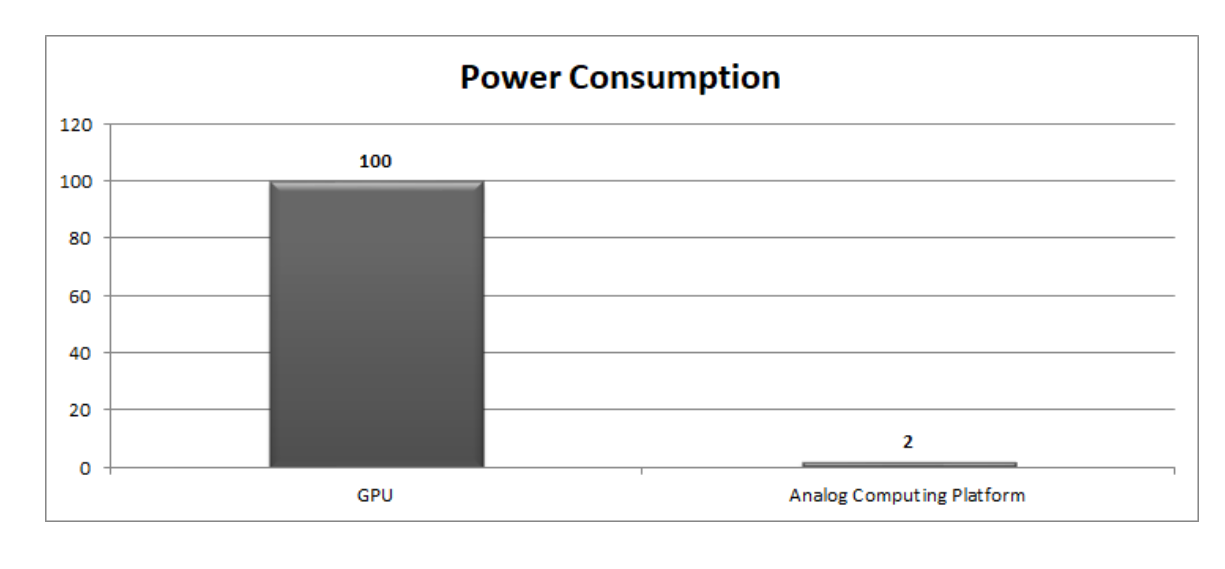
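For readers who think in code, the general principle behind in-memory multiply-accumulate can be simulated in a few lines of Python. This is a minimal sketch of an idealized resistive crossbar, assuming perfect devices with no noise or wire resistance. It is a generic textbook model, not Vellex's actual design, and `crossbar_mac` is a hypothetical name used only here.

```python
def crossbar_mac(conductances, voltages):
    """Idealized analog crossbar: one output current per column.

    conductances[i][j] is the conductance (in siemens) of the memory cell
    joining input row i to output column j; it plays the role of a weight.
    voltages[i] is the voltage (in volts) applied to row i; it is the input.
    Ohm's law gives each cell's current (G * V), and Kirchhoff's current law
    sums the currents on each column wire, so every column produces one
    dot product without the weights ever leaving the array.
    """
    num_cols = len(conductances[0])
    currents = [0.0] * num_cols
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v  # multiply (Ohm) and accumulate (Kirchhoff)
    return currents


# Three inputs feeding two outputs: a 3x2 weight matrix stored as conductances.
weights = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
    [5e-6, 6e-6],
]
inputs = [0.1, 0.2, 0.3]
currents = crossbar_mac(weights, inputs)
print([f"{i * 1e6:.1f} uA" for i in currents])
```

In software the two nested loops run one step at a time. In the physical array, every cell conducts simultaneously, which is where the speed and energy savings are expected to come from.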

By removing the separation between memory and processing, Vellex's approach delivers two game-changing benefits:

Taking this concept even further is neuromorphic computing—a field dedicated to building chips that are directly inspired by the architecture of the human brain.

These chips use "spiking neural networks" that operate on an event-based model. Like our own neurons, they only fire—and consume power—when there's new information to process. For the rest of the time, they are in an ultra-low-power state. This is perfect for the "always-on" AI that we increasingly want in our lives, from a smart speaker listening for a wake word to a wearable monitoring our health. Companies are already producing neuromorphic chips that run on sub-milliwatt power, paving the way for devices with batteries that last for months or even years.
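The event-based idea can be illustrated with a toy leaky integrate-and-fire neuron, one of the simplest spiking models. This is a sketch under stated assumptions: the threshold and leak values are arbitrary, `lif_neuron` is a hypothetical helper, and real neuromorphic chips implement this behavior in circuitry rather than in a Python loop.

```python
def lif_neuron(inputs, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron that only does work on input events.

    inputs holds one value per time step; 0.0 means "no event".
    Returns (spike_times, updates), where updates counts the time steps on
    which the neuron actually had to compute anything.
    """
    potential = 0.0
    last_step = 0
    spike_times = []
    updates = 0
    for step, value in enumerate(inputs):
        if value == 0.0:
            continue  # no event: the neuron stays idle
        # Apply the leak for all the silent steps since the last event at once.
        potential *= leak ** (step - last_step)
        potential += value
        last_step = step
        updates += 1
        if potential >= threshold:
            spike_times.append(step)
            potential = 0.0  # reset after firing
    return spike_times, updates


# 100 time steps, but only three of them carry an input event.
signal = [0.0] * 100
signal[10] = 0.6
signal[11] = 0.6
signal[50] = 0.6
spike_times, updates = lif_neuron(signal)
print(f"spikes at steps {spike_times}, {updates} updates for {len(signal)} time steps")
```

Two closely spaced events push the neuron over its threshold and make it fire, while the isolated one leaks away. The neuron does work on only three of the hundred time steps, which is the property that keeps always-on devices in a low-power state most of the time.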

While massive digital GPUs will continue to power the data centers that train tomorrow's giant AI models, the next explosion of growth is happening at the edge—on the billions of smartphones, PCs, cars, and sensors that surround us.

On these devices, the game isn't about raw speed; it's about efficiency. A chip that delivers a 100x improvement in performance-per-watt is far more valuable than one that's marginally faster but kills a battery in an hour.

This is where analog and neuromorphic chips are set to dominate. By solving AI's energy crisis, this resurrected technology is finally unlocking the true promise of AI: not just as a powerful tool in the cloud, but as an intelligent, efficient, and sustainable presence in every part of our lives.
