For decades, progress in computing has been synonymous with digital scaling - faster transistors, denser chips, more powerful clusters. But as AI workloads surge, this once-reliable trajectory is colliding with the limits of physics and energy.

The next frontier in performance will not come from squeezing more logic into silicon - it will come from computing with physics itself.

Analog computing represents that shift. It’s not just a faster engine; it’s a fundamentally more efficient model of computation - one that aligns with the physical realities of both data and energy.

Artificial intelligence is now one of the largest consumers of compute resources in human history.

Training state-of-the-art models has become a power-intensive industrial process, rivaling small nations in electricity use.

The result: a compute-energy-carbon bottleneck that threatens both the economics and sustainability of innovation.

In the digital era, performance has traditionally been defined by FLOPS — raw computational throughput.

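But for modern AI and HPC, that metric has become incomplete. The true measure of performance now lies within what can be called the Compute–Energy–Carbon Triangle: how much computation a system delivers, how much energy it consumes to deliver it, and how much carbon that energy emits.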

Current HPC systems optimize primarily for compute, often at the expense of energy and carbon efficiency.

Yet each dimension is now economically linked: higher compute → higher power → higher cost and carbon liability.
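That chain is simple arithmetic: energy is power multiplied by time, electricity cost is energy multiplied by a price, and emissions are energy multiplied by the grid's carbon intensity. The sketch below makes the link explicit. The helper name and every input value (cluster power, run length, price per kWh, grid carbon intensity) are hypothetical placeholders chosen for illustration, not figures from this article.

```python
from dataclasses import dataclass


@dataclass
class RunFootprint:
    energy_kwh: float  # total electricity consumed by the run
    cost_usd: float    # electricity cost only; hardware and facilities excluded
    co2_kg: float      # operational emissions from that electricity


def run_footprint(power_kw: float, hours: float,
                  price_usd_per_kwh: float,
                  grid_kg_co2_per_kwh: float) -> RunFootprint:
    """Hypothetical helper: energy = power x time; cost and carbon scale linearly with energy."""
    energy_kwh = power_kw * hours
    return RunFootprint(
        energy_kwh=energy_kwh,
        cost_usd=energy_kwh * price_usd_per_kwh,
        co2_kg=energy_kwh * grid_kg_co2_per_kwh,
    )


# Illustrative inputs only: a 1 MW cluster running for 30 days.
baseline = run_footprint(power_kw=1_000, hours=30 * 24,
                         price_usd_per_kwh=0.10, grid_kg_co2_per_kwh=0.4)
# Doubling the power draw doubles energy, cost, and carbon together.
doubled = run_footprint(power_kw=2_000, hours=30 * 24,
                        price_usd_per_kwh=0.10, grid_kg_co2_per_kwh=0.4)

print(baseline)
print(doubled.cost_usd / baseline.cost_usd)
```

Because all three outputs are linear in energy, any gain in compute bought with extra power shows up one-for-one in the cost and carbon columns.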

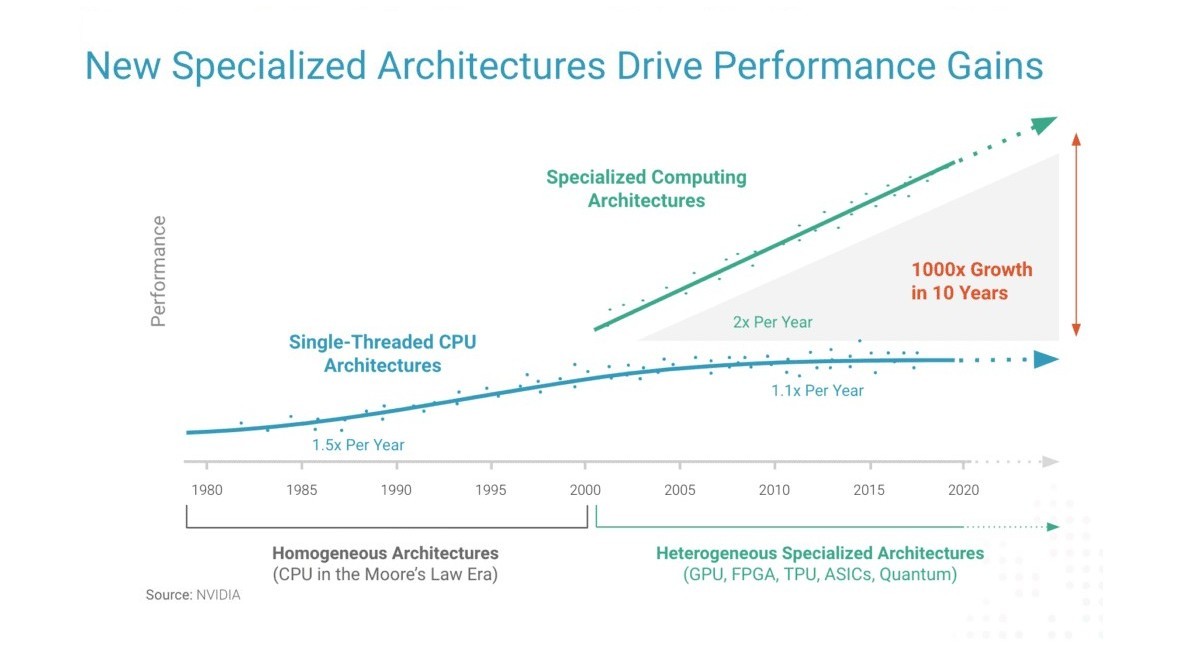

The digital world is built on a simple promise - every two years, chips get smaller, faster, and cheaper.

That promise has broken down.

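Moore’s Law and Dennard Scaling - the dual engines of progress for 50 years - have effectively plateaued. Transistor density gains have slowed, and since Dennard scaling broke down in the mid-2000s, smaller transistors no longer bring a proportional drop in power.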

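Meanwhile, shuttling data between memory and processor often costs far more energy than the arithmetic performed on it. In essence, we are burning vast amounts of energy to move zeros and ones around.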

The architecture of modern HPC, rooted in von Neumann’s separation of memory and compute, is becoming an energetic liability.

It’s a 20th-century design being pushed to its 21st-century limits.

Analog computing challenges that architecture entirely.

Instead of abstracting away physics, it computes with it.

Where digital processors represent information as discrete bits, analog systems represent and manipulate continuous quantities — voltages, currents, or waveforms — that inherently model real-world dynamics.
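One widely studied way to compute with continuous quantities is an analog crossbar array. Matrix weights are stored as conductances G, inputs are applied as voltages V, Ohm's law gives each cell's current (I = G·V), and Kirchhoff's current law sums the currents on each output line, so the array produces a matrix-vector product in one physical step. The NumPy sketch below only simulates that idea. The crossbar framing, the function name `crossbar_mvm`, and the Gaussian noise model standing in for device imperfections are illustrative assumptions, not a description of any specific analog chip.

```python
from typing import Optional

import numpy as np


def crossbar_mvm(conductance: np.ndarray, voltages: np.ndarray,
                 noise_std: float = 0.0,
                 rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Simulate an idealized analog crossbar computing I = G @ V.

    conductance: (n_out, n_in) array; G[j, i] links input line i to output line j.
    voltages:    (n_in,) input voltages.
    noise_std:   relative std of Gaussian noise on each conductance (an assumed
                 model of device variation; 0.0 gives the ideal result).
    """
    rng = rng if rng is not None else np.random.default_rng()
    # Device variation: each physical conductance deviates slightly from its target.
    actual_g = conductance * (1.0 + noise_std * rng.standard_normal(conductance.shape))
    # Ohm's law per cell (current = G * V), then Kirchhoff's current law sums
    # the cell currents along each output line: one dot product per line.
    return actual_g @ voltages


G = np.array([[1.0, 2.0, 0.5],
              [0.0, 1.5, 3.0]])  # weights encoded as conductances (arbitrary units)
V = np.array([0.2, 0.1, 0.4])   # inputs encoded as voltages (arbitrary units)

ideal = crossbar_mvm(G, V)  # matches an exact digital matrix-vector product
noisy = crossbar_mvm(G, V, noise_std=0.02,
                     rng=np.random.default_rng(0))  # close, but not bit-exact
print(ideal, noisy)
```

The noisy result illustrates the trade the article describes later: the physics performs the multiply-accumulate directly, while precision depends on how well the devices behave, which is one reason digital logic remains in the loop.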

This leads to three defining advantages:

The outcome is not just faster computing; it’s computing with radically higher energy proportionality.

You get performance that scales with physics, not against it.

For enterprises and governments betting their future on AI, HPC is no longer a back-end utility — it’s a core strategic asset.

But the economics of that asset are changing fast.

The financial logic is straightforward: when power defines cost, efficiency defines advantage.

Analog computing redefines the compute cost curve, transforming energy from a constraint into a competitive differentiator.

Analog will not replace digital; it will redefine its boundaries.

The future of high-performance computing is hybrid - a stack where digital logic ensures precision, and analog accelerators deliver efficiency for continuous, physics-heavy workloads.

Such architectures could cut AI training energy by 100× and reduce inference costs by 90%, making intelligence not just faster but economically and environmentally sustainable.

This convergence of HPC, AI, and analog will mark the most profound transformation in computation since the advent of silicon itself.

In the coming decade, the world will no longer measure supercomputers solely in FLOPS.

We’ll measure them in efficiency per watt: how intelligently they convert energy into insight.
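Efficiency per watt is a ratio: sustained throughput divided by average power draw, the same idea behind FLOPS-per-watt rankings such as the Green500. The minimal sketch below compares two hypothetical systems, `system_a` and `system_b`, whose throughput and power figures are invented placeholders rather than measurements, to show how a machine with lower raw throughput can still lead on this metric.

```python
from typing import NamedTuple


class System(NamedTuple):
    name: str
    sustained_flops: float  # operations per second actually delivered on the workload
    avg_power_w: float      # average electrical power while running it


def flops_per_watt(system: System) -> float:
    """Efficiency metric: useful operations per joule (FLOPS / W = FLOP / J)."""
    return system.sustained_flops / system.avg_power_w


# Hypothetical systems; numbers are placeholders, not measurements.
systems = [
    System("system_a", sustained_flops=1.0e18, avg_power_w=20.0e6),
    System("system_b", sustained_flops=0.5e18, avg_power_w=2.0e6),
]

# Rank by efficiency rather than by raw throughput.
for s in sorted(systems, key=flops_per_watt, reverse=True):
    print(f"{s.name}: {flops_per_watt(s) / 1e9:.1f} GFLOPS/W")
```

Ranked by raw throughput, `system_a` wins; ranked by operations per joule, `system_b` delivers five times more work for the same energy.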

Analog computing is uniquely positioned to lead that transition. It aligns with both the physics of computation and the economics of sustainability.

For business leaders, embracing it early is not just a technological choice; it’s a strategic hedge against the rising cost of intelligence.

The future of high-performance computing won’t be built by pushing electrons faster through digital gates.

It will be built by computing directly with the laws of nature - efficiently, continuously, and analogically.
